A new article and a new attempt to summarize my latest experiments with Consul. Below you will find a review, links, code and other materials. I created a simple demo, and based on it I would like to describe my findings and thoughts.

Service mesh on top of the Consul stack and related topics such as Envoy proxy and multi-datacenter installations are left out of this story.

The enterprise version of Consul is left out as well. No enterprise features, only the open-source version is used.

The Goal

The idea is very simple. I want a test environment where I can try Consul in different configurations and topologies.

PoS. Structure, components, code, etc...

All source code is available in repository - https://gitverse.ru/aganyushkin/consul-demo

Structure:

consul-demo/

vagrant/ - vm configuration

ansible-playbooks/ - roles: consul, consul-client, consul-template, java-application, java-environment, load-balancer

consul-configurations/ - ACL configuration and app configs for lb, gw and service

demo-gateway/ - API Gateway based on spring cloud gateway. Java application

demo-service/ - Simple service. Java application

initialize.sh - Script that creates and initializes all components

destroy.sh - Script that cleans up the environment

Once all components are created, initialized and configured, you will have the following:

I used Parallels for virtualization on an M-series processor. Details can be found here: Parallel provider notes

Because of the M-series processor I also used the gutehall/fedora40 image (Vagrant box), which is available for this architecture.

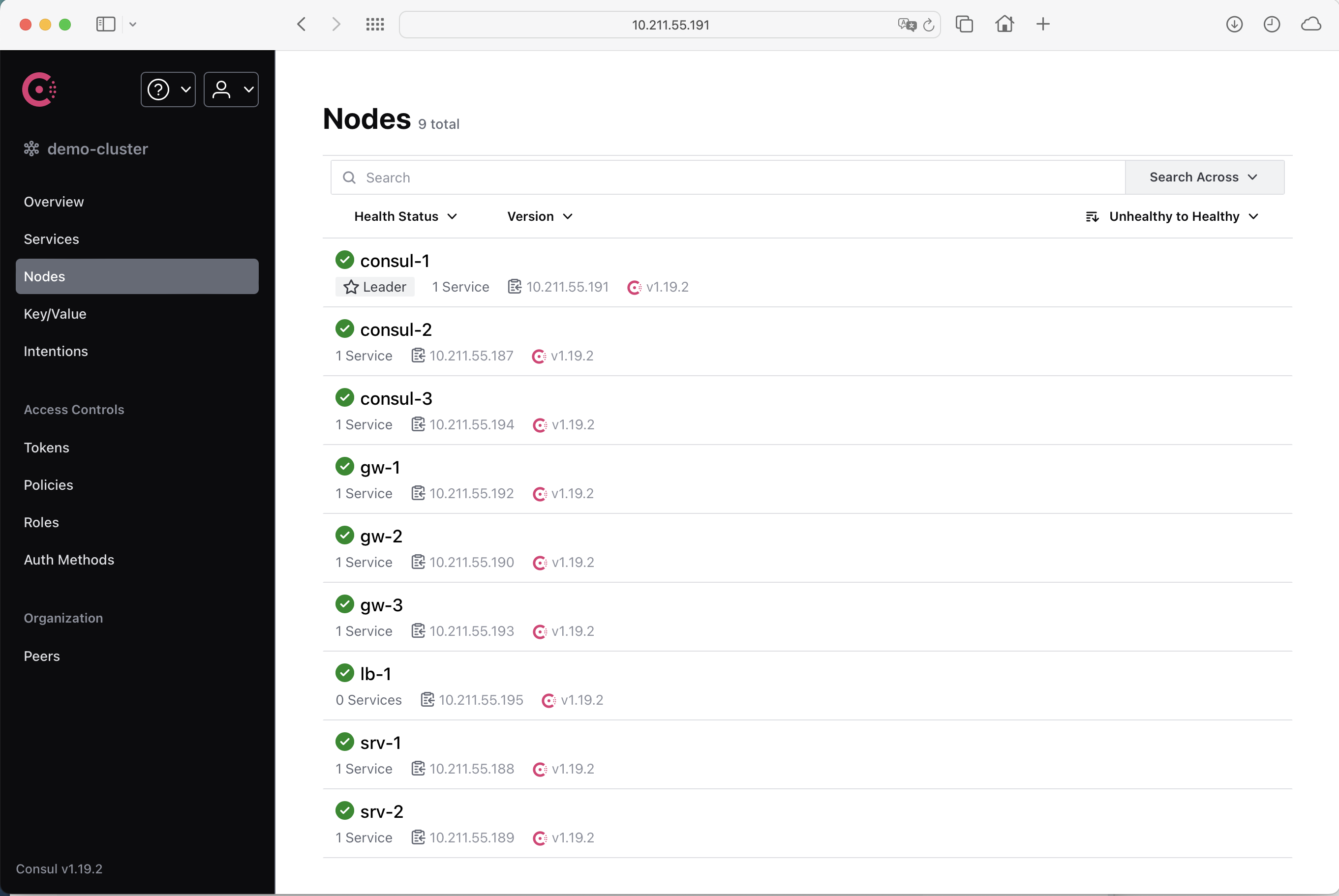

- Server status

cd vagrant

vagrant status

#Current machine states:

#

#consul-1 running (parallels)

#consul-2 running (parallels)

#consul-3 running (parallels)

#lb-1 running (parallels)

#gw-1 running (parallels)

#gw-2 running (parallels)

#gw-3 running (parallels)

#srv-1 running (parallels)

#srv-2 running (parallels)

#...

- List of server IPs

cat ./vagrant/inventory.list

#consul-1:10.211.55.191

#consul-2:10.211.55.187

#consul-3:10.211.55.194

#lb-1:10.211.55.195

#gw-1:10.211.55.192

#gw-2:10.211.55.190

#gw-3:10.211.55.193

#srv-1:10.211.55.188

#srv-2:10.211.55.189

The IP list above is not static; in your environment it will be different.
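Since the addresses change between runs, it can be handy to read them from the inventory file instead of copying them by hand. Below is a minimal Python sketch, assuming the file keeps the `host:ip` format shown above. The helper names `parse_inventory` and `consul_ui_url` are hypothetical and not part of the repository.

```python
def parse_inventory(text: str) -> dict[str, str]:
    """Parse lines of the form 'host:ip' into a {host: ip} mapping."""
    hosts = {}
    for line in text.splitlines():
        # Tolerate a leading '#', as in the commented listing above.
        line = line.strip().lstrip("#").strip()
        if not line or ":" not in line:
            continue
        name, ip = line.split(":", 1)
        hosts[name.strip()] = ip.strip()
    return hosts


def consul_ui_url(hosts: dict[str, str], server: str = "consul-1") -> str:
    """Build the Consul UI address for one server (port 8500, as used in this demo)."""
    return f"http://{hosts[server]}:8500"


# Sample content copied from the listing above; your addresses will differ.
sample = "consul-1:10.211.55.191\nlb-1:10.211.55.195\n"
print(consul_ui_url(parse_inventory(sample)))
```

In a real run you would pass the content of ./vagrant/inventory.list instead of the sample string.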

- Access to vm shell for consul host

cd vagrant

vagrant ssh consul-1

- Consul UI

- Admin token for Consul UI

# in repo root

cat ./tmp/admin.token

How to start

Prerequisites

- A virtualization platform and a configured Vagrant provider for your environment.

I am using Parallels with the parallels provider,

and my VM definition looks like the example below, with

consul.vm.provider "parallels" do ...

    ...
    N = 3
    (1..N).each do |machine_id|
      config.vm.define "consul-#{machine_id}" do |consul|
        consul.vm.box = "gutehall/fedora40"
        consul.vm.provider "parallels" do |p|
          p.memory = "1024"
          p.cpus = "2"
        end
        consul.vm.hostname = "consul-#{machine_id}"
      end
    end
    ...

- A private/public key pair in the standard .ssh location in your home directory.

The public key is injected into each VM, so you can connect to the VMs with your usual ssh tools and CLI:

    config.vm.provision "shell" do |s|
      ssh_pub_key = File.readlines("#{Dir.home}/.ssh/id_ed25519.pub").first.strip
      s.inline = <<-SHELL
        echo #{ssh_pub_key} >> /home/vagrant/.ssh/authorized_keys
        echo #{ssh_pub_key} >> /root/.ssh/authorized_keys
      SHELL
    end

- git cli

- OpenJDK

❯ java -version

openjdk version "22.0.1" 2024-04-16

OpenJDK Runtime Environment (build 22.0.1+8-16)

OpenJDK 64-Bit Server VM (build 22.0.1+8-16, mixed mode, sharing)

- VPN to have access to HashiCorp resources

let's try

Clone source code

git clone ssh://git@gitverse.ru:2222/aganyushkin/consul-demo.git

Start init script

cd consul-demo

# chmod +x initialize.sh

./initialize.sh

The following actions will be executed:

- Build java application: gateway

- Build java application: service

- Create and start all VMs with Vagrant

- Prepare inventory for ansible

- Start ansible playbooks to deploy required roles for each server

- Initialize Consul cluster with ACL and application (lb/gateway/service) configurations

- Pull access tokens (Consul ACL) from VM and save them into tmp location

To open the Consul UI, get the Consul server IP: cat ./vagrant/inventory.list | grep consul-1

and go to http://<consul-server-ip>:8500

Main Consul features

Yes, right :) the documentation can be found here.

The two key features, in my opinion: Service Discovery & App Configuration.

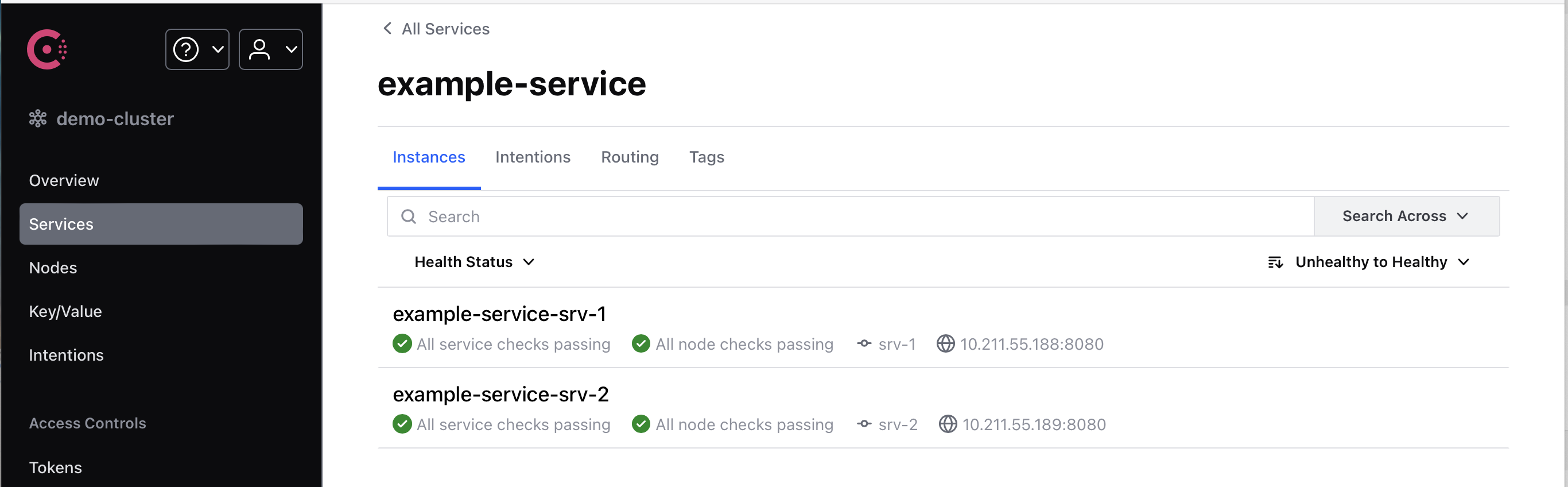

Service Discovery

Automated service discovery with Consul means that all our services are added to a special registry together with their availability status and coordinates (IP address and port).

On the other side, a service only needs to know a service id: it connects to the registry and gets the addresses of all live instances of that service. Of course, services are notified when the availability status changes or when new service instances are added.

This approach lets us avoid manual configuration and the errors that come with it, and it really simplifies the configuration of the runtime environment.
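To make the lookup side concrete, here is a small Python sketch of a discovery query against Consul's HTTP health endpoint (`/v1/health/service/<name>` with the `passing` filter). The agent address `http://127.0.0.1:8500`, the service name and the sample response are assumptions for illustration; the demo applications themselves use spring-cloud-consul rather than code like this.

```python
import json
from urllib.parse import quote


def health_url(agent: str, service: str) -> str:
    """URL of the health endpoint, restricted to instances with passing checks."""
    return f"{agent}/v1/health/service/{quote(service)}?passing=true"


def extract_endpoints(payload: str) -> list[tuple[str, int]]:
    """Turn the JSON response into (address, port) pairs."""
    endpoints = []
    for entry in json.loads(payload):
        svc = entry["Service"]
        # Service.Address may be empty; fall back to the node address in that case.
        address = svc.get("Address") or entry["Node"]["Address"]
        endpoints.append((address, svc["Port"]))
    return endpoints


# Hand-written sample in the shape of the real response (heavily trimmed).
SAMPLE = json.dumps([
    {"Node": {"Address": "10.211.55.188"}, "Service": {"Address": "", "Port": 8080}},
    {"Node": {"Address": "10.211.55.189"}, "Service": {"Address": "10.211.55.189", "Port": 8080}},
])

print(health_url("http://127.0.0.1:8500", "demoService"))
print(extract_endpoints(SAMPLE))
```

A real client would fetch the URL (for example with urllib.request.urlopen) and pass the response body to extract_endpoints.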

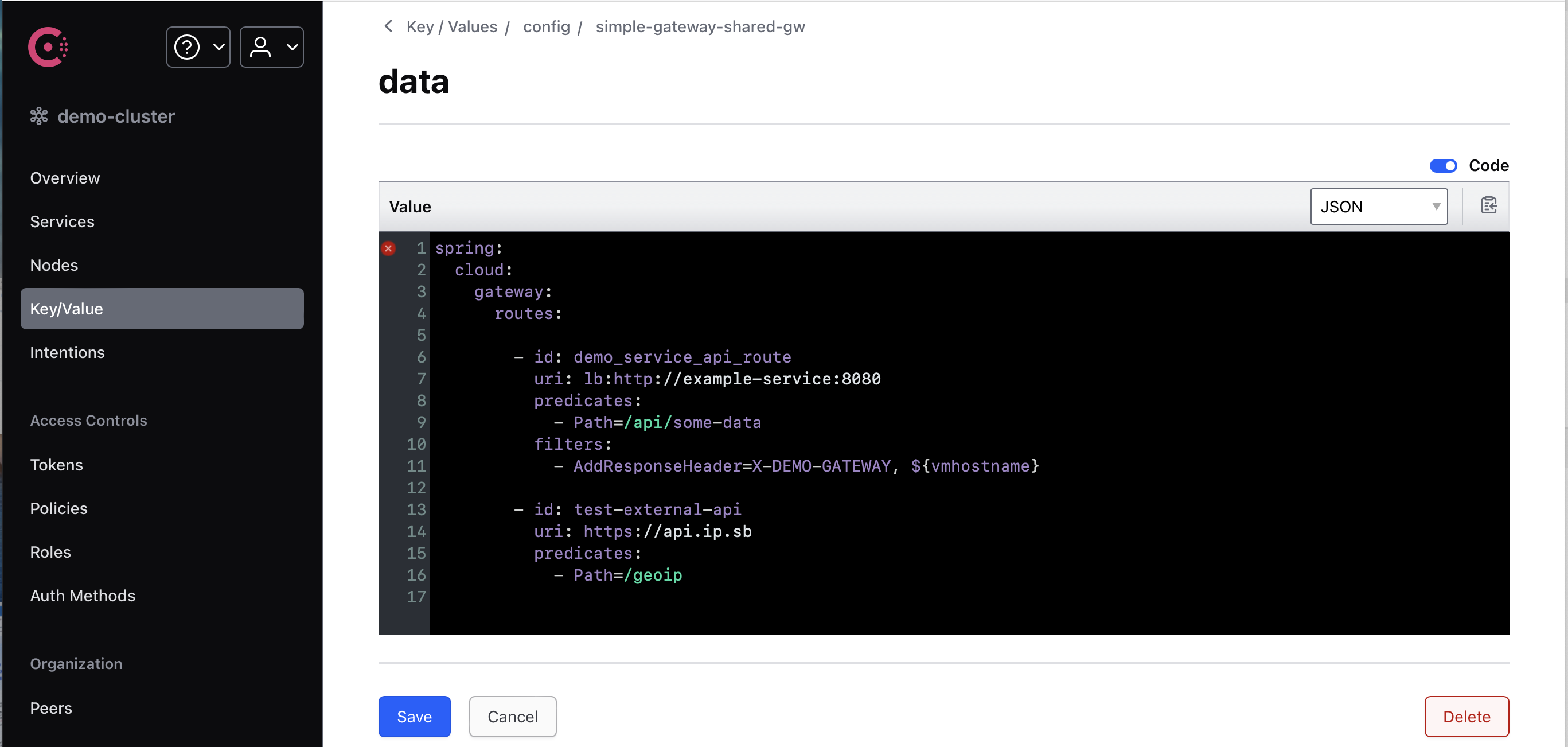

App Configuration

Consul has a simple key/value storage which can be used to keep application configuration files and manage them centrally.

In this case the application components only need a minimal local configuration with the Consul connection properties.

For example, here is the configuration of the demo-gateway component. All other configuration, including all routes, can be moved into the Consul store:

    spring:
      application:
        name: simple-gateway-${gatewayId}
      config:
        import: "consul:"
      cloud:
        consul:
          host: ${CONSUL_ADDRESS}
          port: ${CONSUL_PORT}
          config:
            enabled: true
            fail-fast: true
            prefixes:
              - config
            default-context: application
            name: simple-gateway-${gatewayId}
            profile-separator: '::'
            format: YAML
            data-key: data
            watch:
              enabled: true
              delay: 1000
          discovery:
            instanceId: ${spring.application.name}_${vmhostname}
            serviceName: ${spring.application.name}
            heartbeat:
              enabled: true
              ttl: 10s
            prefer-agent-address: false
            prefer-ip-address: true
    server:
      port: ${GATEWAY_PORT}

And the configuration that is stored in the Consul key/value storage:

    spring:
      cloud:
        gateway:
          routes:
            - id: demo_service_api_route
              uri: lb:http://demoService:8080
              predicates:
                - Path=/api/some-data
              filters:
                - AddResponseHeader=X-DEMO-GATEWAY, ${vmhostname}
            - id: test-external-api
              uri: https://api.ip.sb
              predicates:
                - Path=/geoip
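It may help to see where such a document has to live in the key/value store. The Python sketch below lists the keys that spring-cloud-consul would look at for the settings used in the gateway configuration (prefix `config`, default context `application`, profile separator `::`, data key `data`), ordered from most to least specific. This follows my reading of the spring-cloud-consul documentation and is not taken from the demo code. The function name and the application name `simple-gateway-shared-gw` (that is, gatewayId = shared-gw) are assumptions.

```python
def candidate_keys(prefix, name, default_context, profiles=(), separator="::", data_key="data"):
    """List KV keys for an application, most specific first."""
    keys = []
    for context in (name, default_context):
        # Profile-specific documents come before the plain one for each context.
        for profile in profiles:
            keys.append(f"{prefix}/{context}{separator}{profile}/{data_key}")
        keys.append(f"{prefix}/{context}/{data_key}")
    return keys


for key in candidate_keys("config", "simple-gateway-shared-gw", "application", profiles=["dev"]):
    print(key)
```

With no active profile only two keys remain: one for the application itself and one shared by all applications.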

In the demo applications I used the Java Spring Boot framework with spring-cloud-consul to connect the application to the Consul client agent.

For the load balancer, which is based on Nginx, I used the consul-template component. It gets data from the Consul client, renders a configuration file from a template and reloads the target service to apply the new configuration. It is an interesting workaround if you can't use an embedded library to connect to Consul.

    template {
      source = "/var/lib/consul/templates/nginx.ctmpl"
      destination = "/etc/nginx/nginx.conf"
      command = "systemctl reload nginx"
      command_timeout = "10s"
      error_on_missing_key = false
      backup = true
      wait {
        min = "2s"
        max = "10s"
      }
    }

consul-template can read data from the key/value storage as well as from the discovery registry. This is important: both types of data can be used in the same template.

    ...
    upstream gateway-upstream {
      least_conn;
      {{- range service "simple-gateway-shared-gw"}}
      server {{.Address}}:{{.Port}} {{ $instanceId := .ID -}} weight={{ keyOrDefault (print "properties/lb/weights/" $instanceId) "1" }};
      {{- end}}
    }
    ...
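To show what this template fragment produces, here is a rough Python imitation of the rendering step. It is only a sketch of the logic (one server line per discovered instance, weight looked up in the key/value store with a default of "1"), not how consul-template works internally. The instance IDs follow the instanceId pattern from the gateway configuration, and port 8080 is an assumed value for GATEWAY_PORT.

```python
def render_upstream(instances, kv, kv_prefix="properties/lb/weights/"):
    """Render the nginx upstream block for the given service instances."""
    lines = ["upstream gateway-upstream {", "    least_conn;"]
    for inst in instances:
        # Same idea as keyOrDefault: use the stored weight, or "1" when the key is missing.
        weight = kv.get(kv_prefix + inst["ID"], "1")
        lines.append(f"    server {inst['Address']}:{inst['Port']} weight={weight};")
    lines.append("}")
    return "\n".join(lines)


# Illustrative data: two gateway instances, a custom weight only for the first one.
instances = [
    {"ID": "simple-gateway-shared-gw_gw-1", "Address": "10.211.55.192", "Port": 8080},
    {"ID": "simple-gateway-shared-gw_gw-2", "Address": "10.211.55.190", "Port": 8080},
]
kv = {"properties/lb/weights/simple-gateway-shared-gw_gw-1": "3"}

print(render_upstream(instances, kv))
```

Changing a weight key in Consul therefore changes one server line, and consul-template reloads nginx afterwards.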

In the Consul UI we can manage all configurations directly. After saving, the new configuration is applied to all instances that watch it.

Of course, Consul also has a CLI tool, and the key/value storage can be managed through it as well.
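Besides the UI and the CLI, the same data is reachable over the HTTP API (`/v1/kv/<key>`), where values come back base64-encoded. The Python sketch below decodes such a response. The sample payload is hand-written in the shape of a real response, and the key name is an assumption based on the weights prefix used by the load balancer.

```python
import base64
import json


def decode_kv_response(payload: str) -> dict[str, str]:
    """Map each key in a /v1/kv response to its decoded text value."""
    result = {}
    for item in json.loads(payload):
        raw = item.get("Value")
        # Value is null for keys that were stored without a value.
        result[item["Key"]] = base64.b64decode(raw).decode("utf-8") if raw else ""
    return result


# "Mg==" is the base64 form of the string "2".
SAMPLE = json.dumps([
    {"Key": "properties/lb/weights/simple-gateway-shared-gw_gw-1", "Value": "Mg=="},
])

print(decode_kv_response(SAMPLE))
```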

Consul architecture

Now it is time to describe how Consul works.

A very good architecture overview can be found in the documentation:

- Consul Architecture on developer.hashicorp.com

- Consul reference architecture on developer.hashicorp.com

- and some notes in my blog

In general terms:

Consul cluster - a set of Consul agents started in server mode. Each server interacts with the other servers, keeps the data in a consistent state and provides high availability.

The cluster uses Raft for consistency and leader election. In addition, a gossip protocol is used to manage membership and broadcast messages to the cluster.

Consul client - the second type of Consul agent. These agents are connected to the cluster but do not take part in leader election or data consistency guarantees. They are placed next to the application components, can interact with all cluster nodes, provide data to the application components and run health checks.

Our services connect to the Consul clients over HTTP and get data from them.

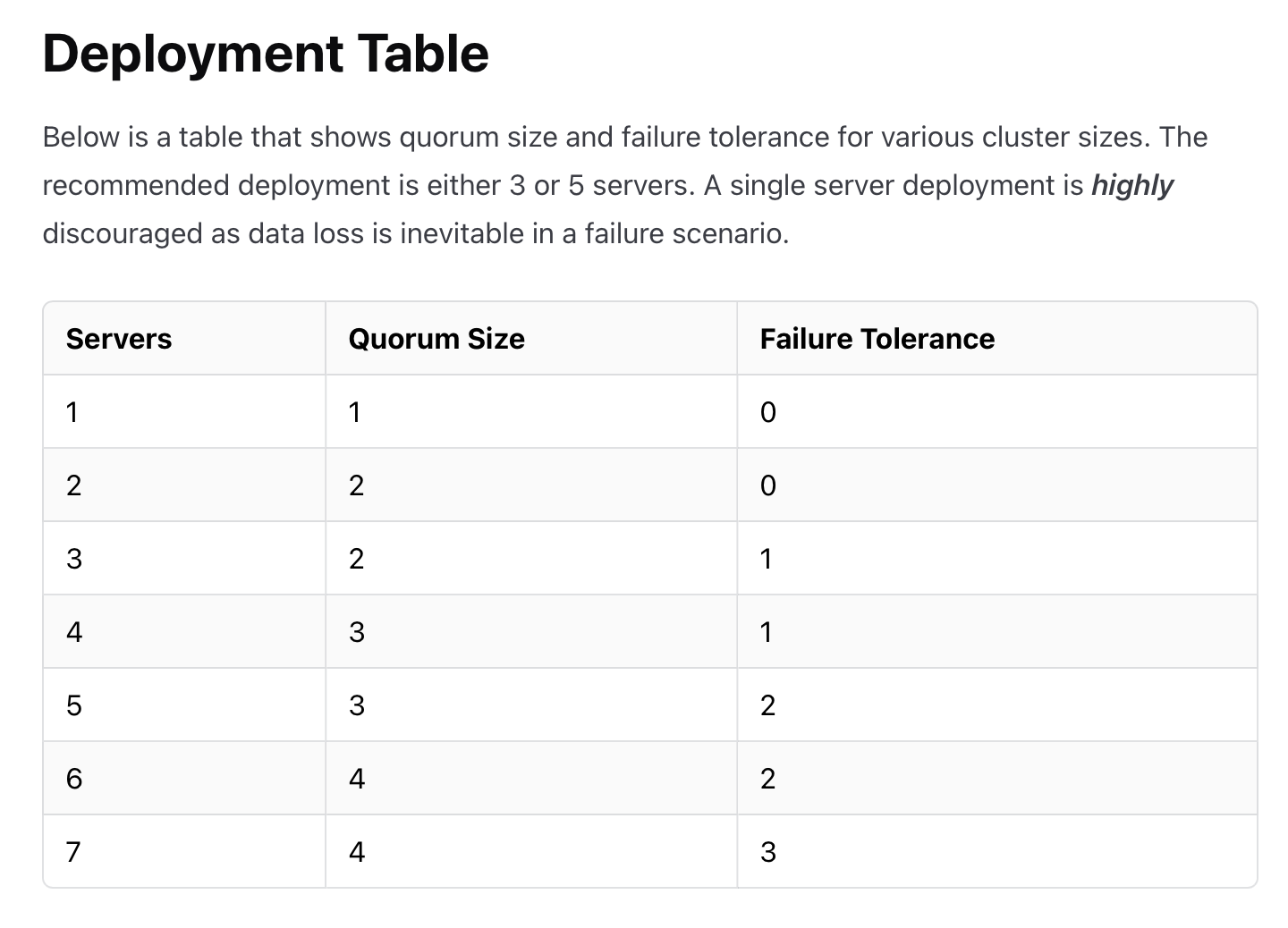

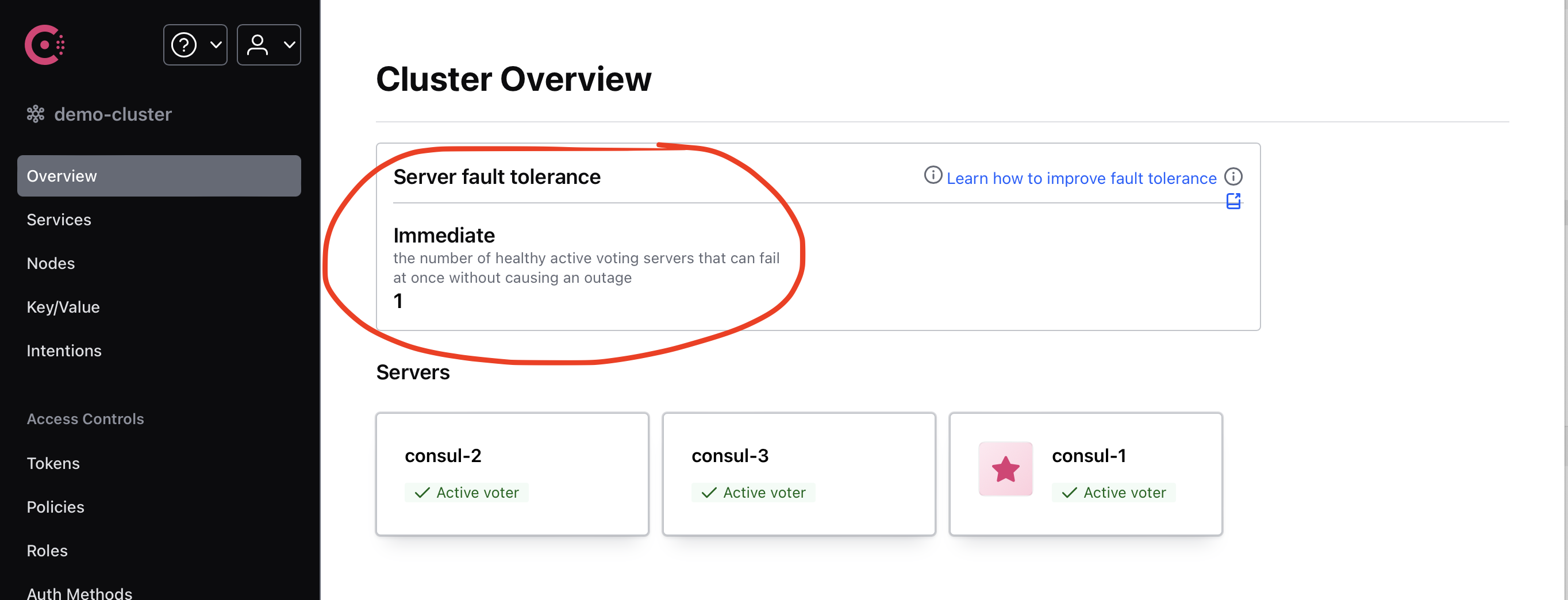

Failure tolerance

again, some docs: https://developer.hashicorp.com/consul/docs/architecture/improving-consul-resilience

The main idea: a Consul cluster should be created with redundancy. The cluster keeps working when servers fail, as long as a majority (quorum) of the servers is still available.

Most important table in failure tolerance topic:

In the described environment I created a cluster with 3 server nodes. In this configuration one server can fail without any effect on consumers.
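The numbers behind this follow from the Raft majority rule: a cluster of N servers needs a quorum of floor(N/2) + 1 servers and tolerates N minus quorum failures. A short Python sketch that prints these values for small clusters:

```python
def quorum(servers: int) -> int:
    """Smallest majority of a cluster with the given number of servers."""
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """How many servers can fail while a majority is still available."""
    return servers - quorum(servers)


print("servers quorum tolerance")
for n in range(1, 8):
    print(f"{n:>7} {quorum(n):>6} {failure_tolerance(n):>9}")
```

Note that an even number of servers does not improve tolerance: 4 servers tolerate one failure, just like 3.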

Security

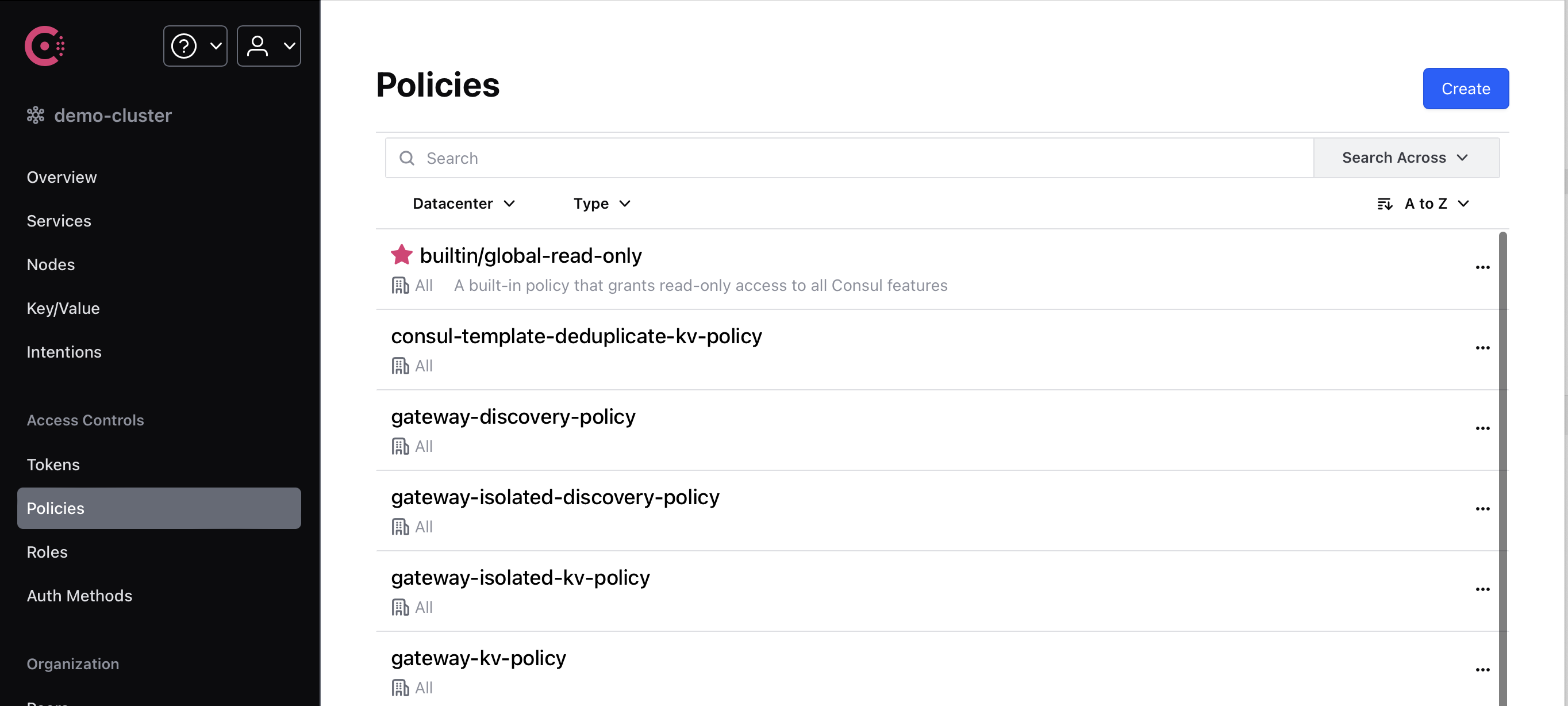

ACL for UI and internal needs

Access Control List (ACL) overview

In Consul we can enable ACLs and configure individual access tokens with different rights for all users and systems.

For each access token we can configure access very granularly, down to a single key in the key/value store or a service property in the discovery registry.

Core ACL concepts:

- Policies - a policy is a set of rules that grant or deny access to resources in the network

- Roles - a role is a collection of policies that your ACL administrator can link to a token

- Tokens - ACL tokens are the core method of authentication in Consul. A token can be linked with policies or roles

Policy for LB component, allows access to LB configuration and weight properties in key/value store

    key_prefix "properties/lb/weights/" {
      policy = "read"
    }
    key_prefix "properties/lb/configuration/" {
      policy = "read"
    }

Next policy for LB allows access to discovery data

    session_prefix "lb" {
      policy = "write"
    }
    node_prefix "lb" {
      policy = "write"
    }
    service "simple-gateway-shared-gw" {
      policy = "read"
    }
    node_prefix "gw" {
      policy = "read"
    }
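As a mental model for how the key_prefix rules of the LB policy are applied, here is a simplified Python sketch. It assumes that the longest matching prefix decides and that anything unmatched falls back to a default of "deny". Both are my assumptions about the setup. Real Consul ACL resolution has more rule types and levels (exact-match rules, "list", and so on), so treat this only as an illustration.

```python
# The two key_prefix rules of the LB key/value policy.
LB_KEY_RULES = {
    "properties/lb/weights/": "read",
    "properties/lb/configuration/": "read",
}

# Higher level includes the lower ones: write implies read.
LEVELS = {"deny": 0, "read": 1, "write": 2}


def is_allowed(rules: dict[str, str], key: str, wanted: str, default: str = "deny") -> bool:
    """Check whether access of level `wanted` to `key` is granted by prefix rules."""
    matches = [prefix for prefix in rules if key.startswith(prefix)]
    # The longest (most specific) matching prefix decides; otherwise use the default.
    policy = rules[max(matches, key=len)] if matches else default
    return LEVELS[policy] >= LEVELS[wanted]


print(is_allowed(LB_KEY_RULES, "properties/lb/weights/gw-1", "read"))
print(is_allowed(LB_KEY_RULES, "properties/lb/weights/gw-1", "write"))
print(is_allowed(LB_KEY_RULES, "config/application/data", "read"))
```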

Consul CLI commands can be executed with ACL enabled. In this case we should pass an access token along with the other arguments:

# access acl token is stored in file ./acl/admin.token

consul catalog nodes -token-file ./acl/admin.token

#Node ID Address DC

#consul-1 ee46e2f6 10.211.55.191 demo-cluster

#consul-2 7951fd04 10.211.55.187 demo-cluster

#consul-3 834e969b 10.211.55.194 demo-cluster

#gw-1 31fd10e3 10.211.55.192 demo-cluster

#gw-2 bc093a00 10.211.55.190 demo-cluster

#gw-3 5dc91557 10.211.55.193 demo-cluster

#lb-1 ce59f9c5 10.211.55.195 demo-cluster

#srv-1 9c2a8eeb 10.211.55.188 demo-cluster

#srv-2 9a09c23e 10.211.55.189 demo-cluster

The token can also be passed on the command line directly:

    -token=<value>
        ACL token to use in the request. This can also be specified via the
        CONSUL_HTTP_TOKEN environment variable. If unspecified, the query
        will default to the token of the Consul agent at the HTTP address.
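The same token works for plain HTTP API calls, where it is sent in the `X-Consul-Token` header. A minimal Python sketch, assuming the token comes either from a file (like the admin.token file above) or from the CONSUL_HTTP_TOKEN environment variable. The helper name and the file-before-environment order are my choices for illustration.

```python
import os


def consul_headers(token_file=None, env=os.environ) -> dict[str, str]:
    """Build HTTP headers carrying the ACL token, if one is available."""
    token = None
    if token_file:
        # Token files contain just the secret, possibly with a trailing newline.
        with open(token_file, encoding="utf-8") as fh:
            token = fh.read().strip()
    else:
        token = env.get("CONSUL_HTTP_TOKEN")
    return {"X-Consul-Token": token} if token else {}


# Example with an explicit environment mapping instead of the real one.
print(consul_headers(env={"CONSUL_HTTP_TOKEN": "example-token"}))
```

Without a token the function returns no header, and the request is then evaluated with the agent's default token.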

The demo contains an initialization shell script which creates several tokens with different roles and policies and saves the tokens to files.

# Create policy

consul acl policy create -token-file ../admin.token -name "service-discovery-policy" -description "" -rules @service-discovery-policy.hcl

consul acl policy create -token-file ../admin.token -name "service-kv-policy" -description "" -rules @service-kv-policy.hcl

# Create role

consul acl role create -token-file ./admin.token -name "srv-component-role" -description "" -policy-name "service-discovery-policy" -policy-name "service-kv-policy"

# Create Token

consul acl token create -token-file ./admin.token -description "token for service" -role-name "srv-component-role" > srv.token.output
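The file written by the last command holds the full description of the new token, while agents and applications only need the secret. Here is a small Python sketch that extracts the `SecretID` field from that output. The sample text only imitates the layout of the command output, and both IDs are made-up placeholders.

```python
def parse_secret_id(output: str) -> str:
    """Return the SecretID value from `consul acl token create` output."""
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "SecretID":
            return value.strip()
    raise ValueError("SecretID not found in output")


# Placeholder values, not real tokens.
SAMPLE_OUTPUT = """\
AccessorID:       00000000-0000-0000-0000-000000000001
SecretID:         00000000-0000-0000-0000-000000000002
Description:      token for service
Local:            false
"""

print(parse_secret_id(SAMPLE_OUTPUT))
```

In the demo flow you would read srv.token.output and store the result where the deployment scripts expect the token.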

Consul agents (clients) must be configured with the right tokens. This can be done with the following properties:

    acl = {
      enabled = true
      tokens = {
        default = "{{ consul_client_acl_token }}"
      }
    }

Zero Trust networks

Zero Trust in this article is not about service mesh with Consul. It is about network connectivity, firewall rules and so on: an environment for Consul where only trusted network connections are allowed.

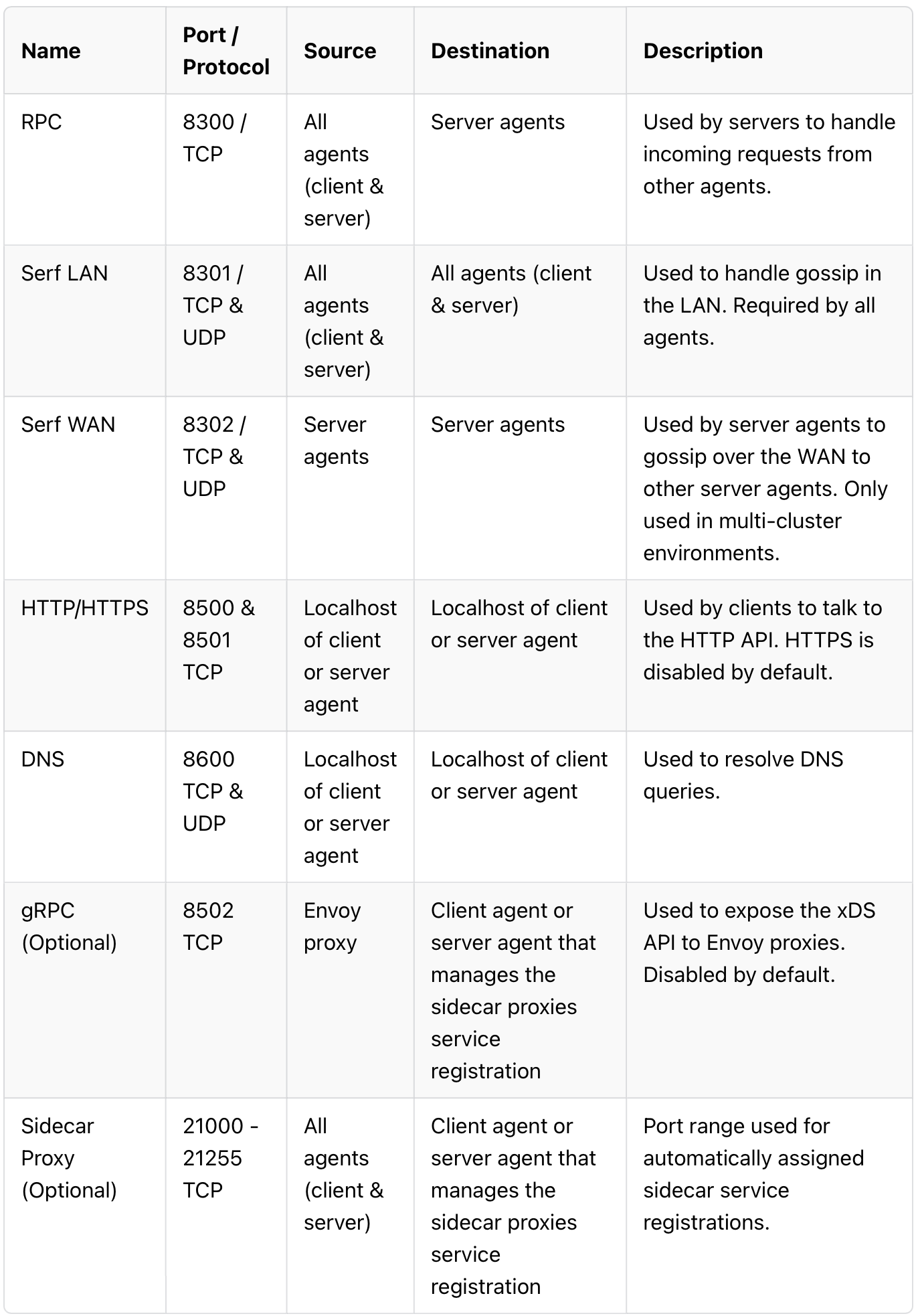

In the Network connectivity section we can find all required ports that must be kept open between the different components:

I haven't added any network restrictions in my demo project, but we can experiment with them here because this is a test environment. That's what it's designed for...

Activate firewall in fedora:

sudo systemctl enable firewalld

sudo systemctl start firewalld

more details about firewall - here in my notes

Now the firewall should drop all incoming tcp/udp requests, and after a restart we will have a broken cluster.

Next step: let's create the firewall rules. According to the connectivity table above, we need:

sudo firewall-cmd --add-port=8300/tcp

sudo firewall-cmd --add-port=8301/udp

sudo firewall-cmd --add-port=8301/tcp

sudo firewall-cmd --add-port=8500/tcp

sudo firewall-cmd --runtime-to-permanent
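After applying the rules it is worth checking from another VM that the TCP ports are reachable again. A minimal Python sketch for that check follows. It covers only TCP (8300, 8301 and 8500 from the rules above), since a UDP port cannot be verified with a simple connect. The function names are hypothetical.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered (timeout) and unreachable all count as closed.
        return False


def check_ports(host: str, ports=(8300, 8301, 8500)) -> dict[int, bool]:
    """Check the Consul TCP ports opened by the firewall rules above."""
    return {port: tcp_port_open(host, port) for port in ports}
```

For example, check_ports("10.211.55.191") run from a gateway VM should report True for all three ports once the rules are active on consul-1.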

Problems with Consul

- The service discovery feature is very sensitive to network quality. Short-term failures and packet loss cancel out all the benefits of service discovery.

- There is no versioning in the Consul key/value storage, which creates problems when using it as the primary configuration store.

- Some interesting features are only available in the enterprise version: OIDC authentication, Network Segments, etc.